Continuing from Part 01, this section walks through the implementation in C#.

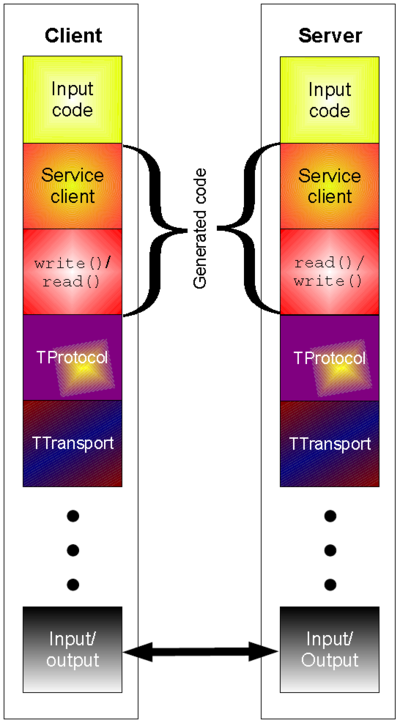

Running the Thrift compiler with the C# generator produces two .cs files. Both the client and the server projects must include these files.

First, the server side:

1. Create a new Console project, add a reference to Thrift.dll from the Thrift C# library, and add the two generated .cs files to the project.

2. Create a class `MyThriftServer` that implements the `JustATest.Iface` interface:

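```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;

namespace TestThrift
{
    // Service handler: implements the JustATest.Iface interface generated by Thrift.
    class MyThriftServer : JustATest.Iface
    {
        // Returns a greeting for the given person.
        public string SayHelloTo(Person person)
        {
            return "Hello " + person.Name;
        }

        // Returns the sum of two integers.
        public int Add(int a, int b)
        {
            return a + b;
        }
    }
}
```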

3. Modify Program.cs:

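```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;
using Thrift.Protocol;
using Thrift.Server;
using Thrift.Transport;

namespace TestThrift
{
    class Program
    {
        static void Main(string[] args)
        {
            // Listen on port 1900; clientTimeout = 0 (no timeout), useBufferedSockets = false.
            TServerSocket serverTransport = new TServerSocket(1900, 0, false);

            // The generated Processor routes incoming calls to our handler.
            JustATest.Processor processor = new JustATest.Processor(new MyThriftServer());

            // TSimpleServer is single-threaded and serves one client connection at a time.
            // With this constructor it uses the binary protocol, matching the client below.
            TServer server = new TSimpleServer(processor, serverTransport);

            // Blocks and serves requests until the process is stopped.
            server.Serve();
        }
    }
}
```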

4. Build and run.
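`JustATest.Processor` is the piece that connects the server loop to `MyThriftServer`: for each incoming call it reads the method name from the message and invokes the matching `Iface` method. The following is only a conceptual, self-contained sketch of that dispatch step using a dictionary of delegates. The names `DispatchSketch` and `Dispatch` are hypothetical, `SayHelloTo` takes a plain name string instead of a `Person`, and the real generated `Processor` also deserializes arguments and serializes results through the `TProtocol`, which is omitted here.

```csharp
using System;
using System.Collections.Generic;

namespace TestThriftSketch
{
    // Hypothetical, simplified model of what a generated Thrift processor does.
    class DispatchSketch
    {
        // Maps a method name (as it would arrive in the message header) to a handler call.
        private readonly Dictionary<string, Func<object[], object>> processMap =
            new Dictionary<string, Func<object[], object>>();

        public DispatchSketch()
        {
            // In generated code these entries call into the Iface implementation;
            // here they inline the same logic as MyThriftServer (with a name string, not a Person).
            processMap["SayHelloTo"] = args => "Hello " + (string)args[0];
            processMap["Add"] = args => (int)args[0] + (int)args[1];
        }

        public object Dispatch(string methodName, params object[] args)
        {
            Func<object[], object> fn;
            if (!processMap.TryGetValue(methodName, out fn))
            {
                // A real Thrift processor reports this back to the client as an
                // "unknown method" application exception instead of throwing locally.
                throw new InvalidOperationException("Unknown method: " + methodName);
            }
            return fn(args);
        }

        static void Main()
        {
            var processor = new DispatchSketch();
            Console.WriteLine(processor.Dispatch("SayHelloTo", "neohope")); // Hello neohope
            Console.WriteLine(processor.Dispatch("Add", 1, 2));             // 3
        }
    }
}
```

Keeping the method table in a dictionary means the server loop never needs to know about individual service methods; it only hands each message to the processor.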

Then the client side:

1. Create a new Console project, add a reference to Thrift.dll from the Thrift C# library, and add the two generated .cs files to the project.

2. Modify Program.cs:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;
using Thrift.Protocol;
using Thrift.Transport;

namespace TestThriftClient
{
    class Program
    {
        static void Main(string[] args)
        {
            // Connect to the server on the same host and port it listens on.
            TTransport transport = new TSocket("localhost", 1900);
            // Must match the server's protocol (binary).
            TProtocol protocol = new TBinaryProtocol(transport);
            JustATest.Client client = new JustATest.Client(protocol);

            transport.Open();
            try
            {
                Person p = new Person();
                p.Name = "neohope";

                Console.WriteLine(client.SayHelloTo(p)); // Hello neohope
                Console.WriteLine(client.Add(1, 2));     // 3
            }
            finally
            {
                // Always release the connection, even if a call throws.
                transport.Close();
            }
        }
    }
}
```

3. Build and run.
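Apart from running the client against the server, the handler logic itself can be checked without opening a socket, because `MyThriftServer` is an ordinary C# class. So that the sketch below compiles on its own, it uses hypothetical stand-ins (`PersonStub`, `IJustATestStub`, `HandlerSketch`) that only mirror the shape of the generated `Person` type and `JustATest.Iface` interface; in the real project you would instantiate `MyThriftServer` with the generated types instead.

```csharp
using System;

namespace TestThriftSketch
{
    // Hypothetical stand-in for the generated Person type (only Name is mirrored).
    class PersonStub
    {
        public string Name { get; set; }
    }

    // Hypothetical stand-in mirroring the two methods of the generated JustATest.Iface.
    interface IJustATestStub
    {
        string SayHelloTo(PersonStub person);
        int Add(int a, int b);
    }

    // Same logic as MyThriftServer, written against the stand-in types.
    class HandlerSketch : IJustATestStub
    {
        public string SayHelloTo(PersonStub person)
        {
            return "Hello " + person.Name;
        }

        public int Add(int a, int b)
        {
            return a + b;
        }
    }

    static class HandlerCheck
    {
        static void Main()
        {
            IJustATestStub handler = new HandlerSketch();

            // Call the handler directly: no transport, protocol or processor involved.
            Console.WriteLine(handler.SayHelloTo(new PersonStub { Name = "neohope" })); // Hello neohope
            Console.WriteLine(handler.Add(1, 2));                                       // 3
        }
    }
}
```

Testing the handler this way separates bugs in the service logic from problems in the network setup (port, protocol mismatch, server not running).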