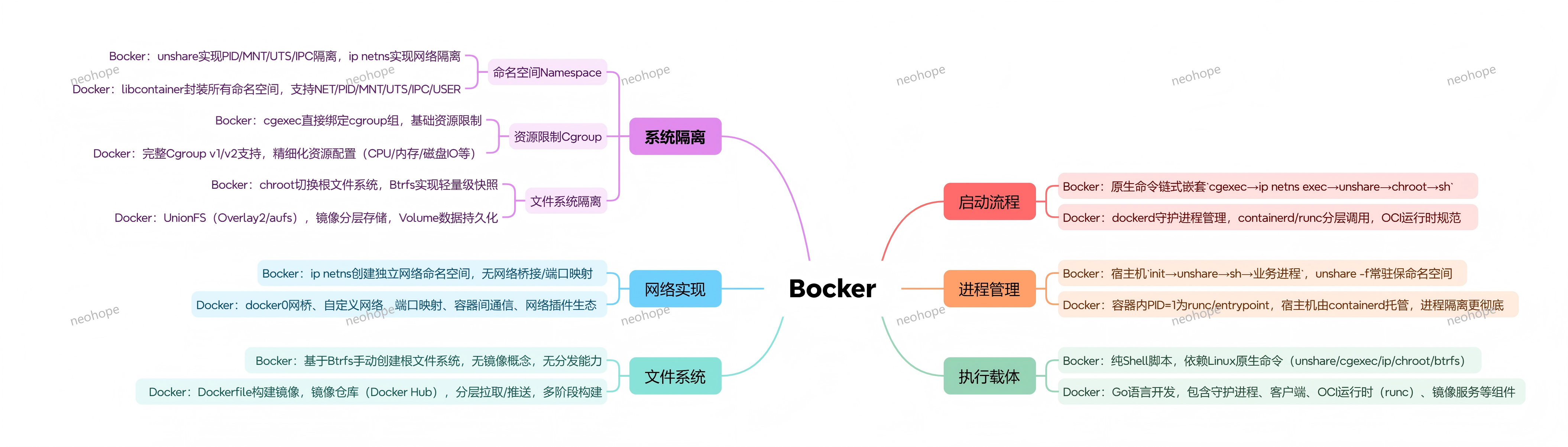

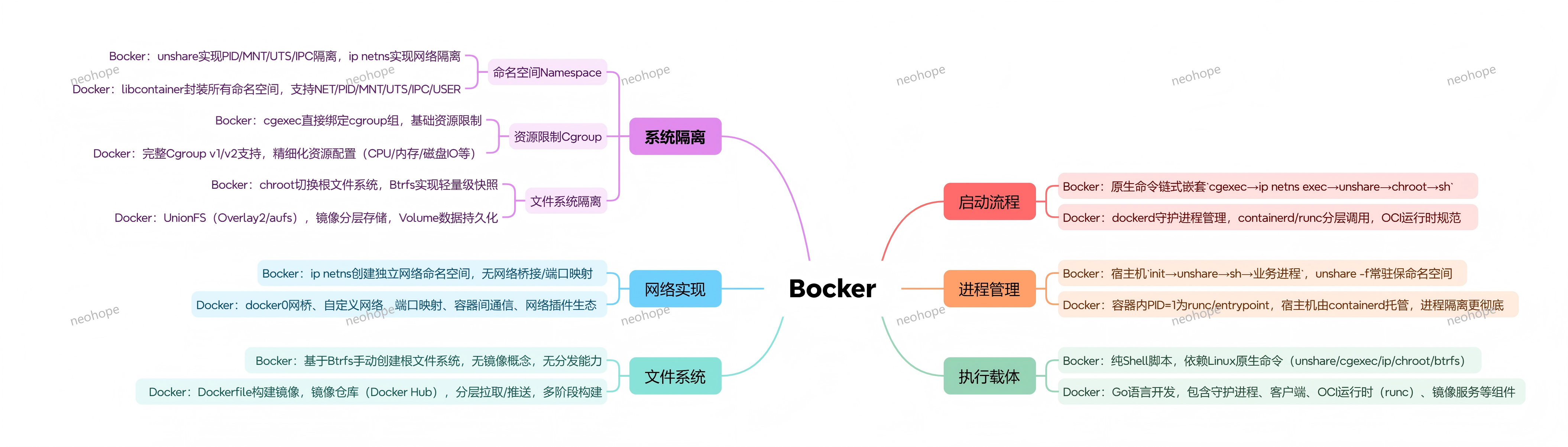

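If you are interested in how Docker works under the hood, I recommend reading the bocker source code.

bocker implements Docker's core features in shell, in only about 100 lines of very compact code.

I have already annotated the code with comments; if you are interested, read this file:

bocker.sh

PS: These comments were written ten years ago. If you find any errors or omissions, please point them out.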

Learn and share.

It took three different cloud providers before I finally got the last example to run...

1. Download the sample source code

git clone https://github.com/istio/istio.git

Cloning into 'istio'...

2. Build the images

cd istio/samples/helloworld/src

./build_service.sh

Sending build context to Docker daemon 7.168kB

Step 1/8 : FROM python:2-onbuild

2-onbuild: Pulling from library/python

......

sudo docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

istio/examples-helloworld-v2 latest 2c7736ccfb8b 45 seconds ago 713MB

istio/examples-helloworld-v1 latest 20be3b24eab7 46 seconds ago 713MB

3. Copy the images to the other nodes

# Save the images to tar files

sudo docker save -o hello1.tar 20be3b24eab7

sudo docker save -o hello2.tar 2c7736ccfb8b

# Send the images to the other 3 nodes and load them there

# Repeat the following for each node

scp -i ~/hwk8s.pem hello1.tar root@192.168.1.229:~/

scp -i ~/hwk8s.pem hello2.tar root@192.168.1.229:~/

ssh -i ~/hwk8s.pem root@192.168.1.229

sudo docker load -i hello1.tar

sudo docker tag 20be3b24eab7 istio/examples-helloworld-v1:latest

sudo docker load -i hello2.tar

sudo docker tag 2c7736ccfb8b istio/examples-helloworld-v2:latest

exit
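Step 3 repeats the same copy-and-load sequence on every node, which can be sketched as a small loop. This is a minimal sketch, not the author's script: the `KEY`, `NODES` and `DRY_RUN` variables and the `run`/`distribute_image` helpers are assumptions made for illustration, and the node list uses the internal IPs of the other three nodes. By default it only prints the commands; set `DRY_RUN=0` to execute them.

```shell
#!/usr/bin/env bash
# Sketch: copy a saved image tarball to each node, then load and tag it there.
# KEY, NODES and DRY_RUN are illustrative assumptions, not part of the original steps.
KEY="${KEY:-$HOME/hwk8s.pem}"
NODES="${NODES:-192.168.1.229 192.168.1.187 192.168.1.83}"
DRY_RUN="${DRY_RUN:-1}"

# run CMD...: print the command in dry-run mode, execute it otherwise.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# distribute_image TARBALL IMAGE_ID REPO_TAG
# Logs in as root (as in the steps above), so sudo is not needed on the node.
distribute_image() {
  local tarball="$1" image_id="$2" repo_tag="$3" node
  for node in $NODES; do
    run scp -i "$KEY" "$tarball" "root@$node:~/"
    run ssh -i "$KEY" "root@$node" \
      "docker load -i ~/$tarball && docker tag $image_id $repo_tag"
  done
}

distribute_image hello1.tar 20be3b24eab7 istio/examples-helloworld-v1:latest
distribute_image hello2.tar 2c7736ccfb8b istio/examples-helloworld-v2:latest
```

Re-tagging after `docker load` is needed here because the images were saved by ID, so the tarball carries no repository name.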

4. Deploy helloworld

kubectl apply -f helloworld.yaml

service/helloworld created

deployment.apps/helloworld-v1 created

deployment.apps/helloworld-v2 created

kubectl apply -f helloworld-gateway.yaml

gateway.networking.istio.io/helloworld-gateway created

virtualservice.networking.istio.io/helloworld created

kubectl get pods

kubectl get deployments

5. Test and generate traffic

# Set the environment variables

# Use the internal IP here

export INGRESS_PORT=$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.spec.ports[?(@.name=="http2")].nodePort}')

export GATEWAY_URL=192.168.1.124:$INGRESS_PORT

# Test it: successive requests are served by different versions of the service

curl http://$GATEWAY_URL/hello

Hello version: v1, instance: helloworld-v1-5b75657f75-9dss5

curl http://$GATEWAY_URL/hello

Hello version: v2, instance: helloworld-v2-7855866d4f-rd2tr

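# You can also browse the service from the public network

# Use the public IP here

# Likewise, refreshing the browser switches between the service versions

http://159.138.135.216:INGRESS_PORT/hello

# Generate traffic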

./loadgen.sh
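To see how requests are split between v1 and v2 without reading each response by eye, the replies can be tallied. The sketch below assumes the response format shown above (`Hello version: vN, instance: ...`); the helper names `count_versions` and `sample_traffic` are made up for this example.

```shell
#!/usr/bin/env bash
# count_versions: read "Hello version: vN, instance: ..." lines on stdin
# and print one "version=count" line per version seen.
count_versions() {
  sed -n 's/^Hello version: \([^,]*\),.*/\1/p' | sort | uniq -c | awk '{print $2 "=" $1}'
}

# sample_traffic URL [N]: request URL N times (default 20) and tally the versions.
# Needs curl and a reachable gateway, so it is only defined here, not called.
sample_traffic() {
  local url="$1" n="${2:-20}" i
  for ((i = 0; i < n; i++)); do
    curl -s "$url"
    echo   # make sure every response ends with a newline
  done | count_versions
}
```

With the variables from this step, a call would look like `sample_traffic "http://$GATEWAY_URL/hello" 20`.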

6. View the information in Kiali

#kiali 20001

istioctl dashboard kiali

# Change the internal port in the nginx configuration as described in the previous section

# Reload the configuration

nginx -s reload

# Open in a browser

http://159.138.135.216:8000

7. The other dashboards can be accessed in the same way

#grafana 3000

istioctl dashboard grafana

#jaeger 16686

istioctl dashboard jaeger

#kiali 20001

istioctl dashboard kiali

#prometheus 9090

istioctl dashboard prometheus

#podid 9876

istioctl dashboard controlz podid

#podid 15000

istioctl dashboard envoy podid

#zipkin

istioctl dashboard zipkin

# Change the internal port in the nginx configuration as described in the previous section

# Reload the configuration

nginx -s reload

# Open in a browser

http://159.138.135.216:8000
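Since each dashboard listens on a different local port, a small lookup makes it harder to put the wrong port into the nginx upstream. This is a sketch only: `dashboard_port` is a hypothetical helper, and it covers just the ports listed above (no port is given for zipkin, so it is reported as unknown).

```shell
#!/usr/bin/env bash
# dashboard_port NAME: print the local port listed above for an istioctl dashboard.
# Returns non-zero for names whose port is not listed in this post.
dashboard_port() {
  case "$1" in
    grafana)    echo 3000 ;;
    jaeger)     echo 16686 ;;
    kiali)      echo 20001 ;;
    prometheus) echo 9090 ;;
    controlz)   echo 9876 ;;
    envoy)      echo 15000 ;;
    *)          echo "unknown dashboard: $1" >&2; return 1 ;;
  esac
}

# Example: the upstream line to put in the nginx config for grafana.
echo "server 127.0.0.1:$(dashboard_port grafana);"
```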

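1. First, set up a working k8s environment by following the earlier k8s tutorial

Setting up a Kubernetes environment 01

| Node | Public IP | Internal IP |
| --- | --- | --- |
| k8s-0001 | 159.138.135.216 | 192.168.1.124 |
| k8s-0002 | 159.138.139.37 | 192.168.1.229 |
| k8s-0003 | 159.138.31.39 | 192.168.1.187 |
| k8s-0004 | 119.8.113.135 | 192.168.1.83 |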

2. Download and deploy Istio

# Download and deploy Istio

curl -L https://istio.io/downloadIstio | sh -

cd istio-1.5.2

export PATH=$PWD/bin:$PATH

istioctl manifest apply --set profile=demo

Detected that your cluster does not support third party JWT authentication. Falling back to less secure first party JWT. See https://istio.io/docs/ops/best-practices/security/#configure-third-party-service-account-tokens for details.

- Applying manifest for component Base...

✔ Finished applying manifest for component Base.

- Applying manifest for component Pilot...

✔ Finished applying manifest for component Pilot.

Waiting for resources to become ready...

Waiting for resources to become ready...

Waiting for resources to become ready...

Waiting for resources to become ready...

Waiting for resources to become ready...

- Applying manifest for component EgressGateways...

- Applying manifest for component IngressGateways...

- Applying manifest for component AddonComponents...

✔ Finished applying manifest for component EgressGateways.

✔ Finished applying manifest for component AddonComponents.

✔ Finished applying manifest for component IngressGateways.

✔ Installation complete

# Tell Istio to automatically inject the Envoy sidecar into pods in the default namespace

kubectl label namespace default istio-injection=enabled

namespace/default labeled

3. Deploy the demo

# Deploy

kubectl apply -f samples/bookinfo/platform/kube/bookinfo.yaml

# Check the pods

kubectl get pods

NAME READY STATUS RESTARTS AGE

details-v1-6fc55d65c9-kxxpm 2/2 Running 0 106s

productpage-v1-7f44c4d57c-h6h7p 2/2 Running 0 105s

ratings-v1-6f855c5fff-2rjz9 2/2 Running 0 105s

reviews-v1-54b8794ddf-tq5vm 2/2 Running 0 106s

reviews-v2-c4d6568f9-q8mvs 2/2 Running 0 106s

reviews-v3-7f66977689-ccp9c 2/2 Running 0 106s

# Check the services

kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

details ClusterIP 10.104.68.235 <none> 9080/TCP 89s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 31m

productpage ClusterIP 10.106.255.85 <none> 9080/TCP 89s

ratings ClusterIP 10.103.19.155 <none> 9080/TCP 89s

reviews ClusterIP 10.110.79.44 <none> 9080/TCP 89s

# Enable external access

kubectl apply -f samples/bookinfo/networking/bookinfo-gateway.yaml

gateway.networking.istio.io/bookinfo-gateway created

virtualservice.networking.istio.io/bookinfo created

# Check the gateway

kubectl get gateway

NAME AGE

bookinfo-gateway 7s

4. Configure ingress

# Check whether an external IP has been assigned

kubectl get svc istio-ingressgateway -n istio-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

istio-ingressgateway LoadBalancer 10.105.220.60 <pending> 15020:32235/TCP,80:30266/TCP,443:30265/TCP,15029:30393/TCP,15030:30302/TCP,15031:30789/TCP,15032:31411/TCP,31400:30790/TCP,15443:31341/TCP 5m30s

# Use a node address as the host (set either this or the LB address, not both)

export INGRESS_HOST=47.57.158.253

# Use the LB address as the host (set either this or the node address, not both)

export INGRESS_HOST=$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

# Set the HTTP port

export INGRESS_PORT=$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.spec.ports[?(@.name=="http2")].nodePort}')

# Set the HTTPS port

export SECURE_INGRESS_PORT=$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.spec.ports[?(@.name=="https")].nodePort}')

# Set and print the external access URL

export GATEWAY_URL=$INGRESS_HOST:$INGRESS_PORT

echo http://$GATEWAY_URL/productpage

# The deployed instance can now be reached through the node's IP address

# Open the URL printed above in a browser

#http://47.57.158.253:30266/productpage
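The choice between the LB address and the node address can also be made automatically: use the LoadBalancer IP when one has been assigned, and fall back to a node IP while EXTERNAL-IP is still `<pending>`. The sketch below assumes the jsonpath query for the LB IP prints an empty string in the pending case; `pick_ingress_host` and `gateway_url` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# pick_ingress_host LB_IP NODE_IP: prefer the LoadBalancer IP, else use the node IP.
pick_ingress_host() {
  local lb_ip="$1" node_ip="$2"
  if [ -n "$lb_ip" ]; then
    echo "$lb_ip"
  else
    echo "$node_ip"
  fi
}

# gateway_url LB_IP NODE_IP PORT: build the host:port pair used as GATEWAY_URL.
gateway_url() {
  echo "$(pick_ingress_host "$1" "$2"):$3"
}

# Example with the values from this step: no LB IP assigned, node IP plus nodePort.
gateway_url "" 47.57.158.253 30266
```

In a real session the first argument would come from the `.status.loadBalancer.ingress[0].ip` query and the third from the `http2` nodePort query shown above.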

5. Enable the management dashboards

# Start Kiali

istioctl dashboard kiali

# Install nginx

# and set up a reverse proxy

vi /etc/nginx/nginx.conf

http {

upstream backend {

# Local port being proxied

server 127.0.0.1:20001;

}

server {

# External port to access

listen 8000;

location / {

proxy_pass http://backend;

}

}

}

# The Kiali management UI is now reachable through port 8000 of the reverse proxy

# http://47.57.158.253:8000
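Because only the upstream port changes from one dashboard to the next, the proxy configuration can be generated rather than edited by hand. This is a sketch under assumptions: `render_proxy_conf` is a hypothetical helper, and the `events {}` line is added because nginx refuses to start from a main configuration file that has no `events` section (the fragment above shows only the `http` block).

```shell
#!/usr/bin/env bash
# render_proxy_conf UPSTREAM_PORT [LISTEN_PORT]
# Print a minimal nginx.conf that proxies LISTEN_PORT (default 8000)
# to a dashboard listening on 127.0.0.1:UPSTREAM_PORT.
render_proxy_conf() {
  local upstream_port="$1" listen_port="${2:-8000}"
  cat <<EOF
events {}
http {
    upstream backend {
        # Local port being proxied
        server 127.0.0.1:${upstream_port};
    }
    server {
        # External port to access
        listen ${listen_port};
        location / {
            proxy_pass http://backend;
        }
    }
}
EOF
}

# Example: the Kiali setup from this step.
render_proxy_conf 20001 8000
```

One way to use it is to redirect the output into a file, check it with `nginx -t -c /path/to/file`, and then reload with `nginx -s reload`.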

PS:

The TCP ports that must be open are:

| Port | Purpose |
| --- | --- |
| 8000 | nginx proxy port |
| 8001 | default k8s proxy port |
| 30266 | bookinfo demo port (may change) |

Common commands:

# Help

oc help

# Diagnostics

oc adm diagnostics

# Modify policy

oc adm policy

# Start a private registry

oc adm registry

oc adm registry --config=admin.kubeconfig --service-account=registry

oc adm registry --config=/var/lib/origin/openshift.local.config/master/admin.kubeconfig --service-account=registry

# Start the router

oc adm router

# Start and stop the cluster

oc cluster up

oc cluster up --public-hostname=172.31.36.215

oc cluster down

# Delete

oc delete all --selector app=ruby-ex

oc delete services/ruby-ex

# Describe resources

oc describe builds/ruby-ex-1

oc describe pod/deployment-example-1-deploy

oc describe secret registry-token-q8dfm

# Expose services

oc expose svc/nodejs-ex

oc expose svc/ruby-ex

# Get information

oc get

oc get all

oc get all --config=/home/centos/openshift3/openshift.local.clusterup/openshift-apiserver/admin.kubeconfig

oc get all --selector app=registry

oc get all --selector app=ruby-ex

oc get builds

oc get events

oc get projects --config=/home/centos/openshift3/openshift.local.clusterup/openshift-apiserver/admin.kubeconfig

oc get secrets

# Log in

oc login

oc login -u developer

oc login -u system:admin

oc login -u system:admin --config=/home/centos/openshift3/openshift.local.clusterup/openshift-apiserver/admin.kubeconfig

oc login https://127.0.0.1:8443 -u developer

oc login https://172.31.36.215:8443 --token=tMgeqgvyGkpxhEH-MhP2AdChbTXCDDHzD-27JvZPfzQ

oc login https://172.31.36.215:8443 -u system:admin

# View logs

oc logs -f bc/nodejs-ex

oc logs -f bc/ruby-ex

# Deploy an app

oc new-app centos/ruby-22-centos7~https://github.com/openshift/ruby-ex.git

oc new-app deployment-example:latest

oc new-app https://github.com/sclorg/nodejs-ex -l name=myapp

oc new-app openshift/deployment-example

oc new-app openshift/nodejs-010-centos7~https://github.com/sclorg/nodejs-ex.git

# Create a project

oc new-project test

# Rollout

oc rollout latest docker-registry

# Check status

oc status

oc status --suggest

oc status -v

# Tag an image

oc tag --source=docker openshift/deployment-example:v1 deployment-example:latest

# Show the version

oc version

# Show the logged-in user

oc whoami

1. Deploy an application from an image

# Log in; the username is developer and any password works

./oc login -u developer

./oc whoami

# Deploy the application

# Method 1

./oc tag --source=docker openshift/deployment-example:v1 deployment-example:latest

# Method 2

./oc tag docker.io/openshift/deployment-example:v1 deployment-example:latest

./oc new-app deployment-example:latest

./oc status

curl http://172.30.192.169:8080

# Update the application

# Method 1

./oc tag --source=docker openshift/deployment-example:v2 deployment-example:latest

# Method 2

./oc tag docker.io/openshift/deployment-example:v2 deployment-example:latest

curl http://172.30.192.169:8080

# Check the result

./oc get all

NAME READY STATUS RESTARTS AGE

pod/deployment-example-3-4wk9x 1/1 Running 0 3m

NAME DESIRED CURRENT READY AGE

replicationcontroller/deployment-example-1 0 0 0 18m

replicationcontroller/deployment-example-2 0 0 0 15m

replicationcontroller/deployment-example-3 1 1 1 4m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/deployment-example ClusterIP 172.30.82.203 <none> 8080/TCP 18m

NAME REVISION DESIRED CURRENT TRIGGERED BY

deploymentconfig.apps.openshift.io/deployment-example 3 1 1 config,image(deployment-example:latest)

NAME DOCKER REPO TAGS UPDATED

imagestream.image.openshift.io/deployment-example 172.30.1.1:5000/myproject/deployment-example latest 4 minutes ago

2. Build an image and deploy the application

# Log in

./oc login https://IP:8443 -u developer

# Deploy the application

./oc new-app openshift/nodejs-010-centos7~https://github.com/sclorg/nodejs-ex.git

--> Found Docker image b3b1ce7 (2 years old) from Docker Hub for "openshift/nodejs-010-centos7"

Node.js 0.10

------------

Platform for building and running Node.js 0.10 applications

Tags: builder, nodejs, nodejs010

* An image stream tag will be created as "nodejs-010-centos7:latest" that will track the source image

* A source build using source code from https://github.com/sclorg/nodejs-ex.git will be created

* The resulting image will be pushed to image stream tag "nodejs-ex:latest"

* Every time "nodejs-010-centos7:latest" changes a new build will be triggered

* This image will be deployed in deployment config "nodejs-ex"

* Port 8080/tcp will be load balanced by service "nodejs-ex"

* Other containers can access this service through the hostname "nodejs-ex"

--> Creating resources ...

imagestream.image.openshift.io "nodejs-010-centos7" created

imagestream.image.openshift.io "nodejs-ex" created

buildconfig.build.openshift.io "nodejs-ex" created

deploymentconfig.apps.openshift.io "nodejs-ex" created

service "nodejs-ex" created

--> Success

Build scheduled, use 'oc logs -f bc/nodejs-ex' to track its progress.

Application is not exposed. You can expose services to the outside world by executing one or more of the commands below:

'oc expose svc/nodejs-ex'

Run 'oc status' to view your app.

# Expose the service

./oc expose svc/nodejs-ex

route.route.openshift.io/nodejs-ex exposed

# Check the status

./oc status

In project My Project (myproject) on server https://IP:8443

http://nodejs-ex-myproject.IP.nip.io to pod port 8080-tcp (svc/nodejs-ex)

dc/nodejs-ex deploys istag/nodejs-ex:latest <-

bc/nodejs-ex source builds https://github.com/sclorg/nodejs-ex.git on istag/nodejs-010-centos7:latest

build #1 pending for about a minute

deployment #1 waiting on image or update

2 infos identified, use 'oc status --suggest' to see details.

# Access the service

curl http://nodejs-ex-myproject.127.0.0.1.nip.io

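1. Prepare the environment

Operating system: CentOS 7.7

2. Install the required software

sudo yum update

sudo yum install curl telnet git docker

3. Modify the Docker configuration to allow the private (insecure) registry

sudo vi /etc/docker/daemon.json

# Contents: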

{

"insecure-registries" : [ "172.30.0.0/16"]

}

4. Start Docker

sudo systemctl start docker

sudo systemctl status docker

sudo systemctl enable docker

5. Download the latest version of OpenShift Origin

https://github.com/openshift/origin/releases/

wget https://github.com/openshift/origin/releases/download/v3.11.0/openshift-origin-server-v3.11.0-0cbc58b-linux-64bit.tar.gz

tar -xf openshift-origin-server-v3.11.0-0cbc58b-linux-64bit.tar.gz

6. Start the cluster

# Change directory

cd openshift

# --public-hostname is the address other nodes use to reach the cluster; it is also the default address of the web console

sudo ./oc cluster up --public-hostname=172.31.36.215

Getting a Docker client ...

Checking if image openshift/origin-control-plane:v3.11 is available ...

Checking type of volume mount ...

Determining server IP ...

Checking if OpenShift is already running ...

Checking for supported Docker version (=>1.22) ...

Checking if insecured registry is configured properly in Docker ...

Checking if required ports are available ...

Checking if OpenShift client is configured properly ...

Checking if image openshift/origin-control-plane:v3.11 is available ...

Starting OpenShift using openshift/origin-control-plane:v3.11 ...

I1112 14:25:54.907027 1428 config.go:40] Running "create-master-config"

I1112 14:25:57.915599 1428 config.go:46] Running "create-node-config"

I1112 14:25:59.062042 1428 flags.go:30] Running "create-kubelet-flags"

I1112 14:25:59.521012 1428 run_kubelet.go:49] Running "start-kubelet"

I1112 14:25:59.721185 1428 run_self_hosted.go:181] Waiting for the kube-apiserver to be ready ...

I1112 14:26:21.735024 1428 interface.go:26] Installing "kube-proxy" ...

I1112 14:26:21.735053 1428 interface.go:26] Installing "kube-dns" ...

I1112 14:26:21.735061 1428 interface.go:26] Installing "openshift-service-cert-signer-operator" ...

I1112 14:26:21.735068 1428 interface.go:26] Installing "openshift-apiserver" ...

I1112 14:26:21.735089 1428 apply_template.go:81] Installing "kube-proxy"

I1112 14:26:21.735098 1428 apply_template.go:81] Installing "openshift-apiserver"

I1112 14:26:21.735344 1428 apply_template.go:81] Installing "kube-dns"

I1112 14:26:21.736634 1428 apply_template.go:81] Installing "openshift-service-cert-signer-operator"

I1112 14:26:25.755466 1428 interface.go:41] Finished installing "kube-proxy" "kube-dns" "openshift-service-cert-signer-operator" "openshift-apiserver"

I1112 14:27:47.998244 1428 run_self_hosted.go:242] openshift-apiserver available

I1112 14:27:47.998534 1428 interface.go:26] Installing "openshift-controller-manager" ...

I1112 14:27:47.998554 1428 apply_template.go:81] Installing "openshift-controller-manager"

I1112 14:27:51.521512 1428 interface.go:41] Finished installing "openshift-controller-manager"

Adding default OAuthClient redirect URIs ...

Adding sample-templates ...

Adding centos-imagestreams ...

Adding router ...

Adding web-console ...

Adding registry ...

Adding persistent-volumes ...

I1112 14:27:51.544935 1428 interface.go:26] Installing "sample-templates" ...

I1112 14:27:51.544947 1428 interface.go:26] Installing "centos-imagestreams" ...

I1112 14:27:51.544955 1428 interface.go:26] Installing "openshift-router" ...

I1112 14:27:51.544963 1428 interface.go:26] Installing "openshift-web-console-operator" ...

I1112 14:27:51.544973 1428 interface.go:26] Installing "openshift-image-registry" ...

I1112 14:27:51.544980 1428 interface.go:26] Installing "persistent-volumes" ...

I1112 14:27:51.545540 1428 interface.go:26] Installing "sample-templates/postgresql" ...

I1112 14:27:51.545551 1428 interface.go:26] Installing "sample-templates/cakephp quickstart" ...

I1112 14:27:51.545559 1428 interface.go:26] Installing "sample-templates/dancer quickstart" ...

I1112 14:27:51.545567 1428 interface.go:26] Installing "sample-templates/django quickstart" ...

I1112 14:27:51.545574 1428 interface.go:26] Installing "sample-templates/rails quickstart" ...

I1112 14:27:51.545580 1428 interface.go:26] Installing "sample-templates/jenkins pipeline ephemeral" ...

I1112 14:27:51.545587 1428 interface.go:26] Installing "sample-templates/sample pipeline" ...

I1112 14:27:51.545595 1428 interface.go:26] Installing "sample-templates/mongodb" ...

I1112 14:27:51.545602 1428 interface.go:26] Installing "sample-templates/mysql" ...

I1112 14:27:51.545609 1428 interface.go:26] Installing "sample-templates/nodejs quickstart" ...

I1112 14:27:51.545616 1428 interface.go:26] Installing "sample-templates/mariadb" ...

I1112 14:27:51.545665 1428 apply_list.go:67] Installing "sample-templates/mariadb"

I1112 14:27:51.545775 1428 apply_list.go:67] Installing "centos-imagestreams"

I1112 14:27:51.552201 1428 apply_list.go:67] Installing "sample-templates/rails quickstart"

I1112 14:27:51.552721 1428 apply_template.go:81] Installing "openshift-web-console-operator"

I1112 14:27:51.553283 1428 apply_list.go:67] Installing "sample-templates/postgresql"

I1112 14:27:51.553420 1428 apply_list.go:67] Installing "sample-templates/cakephp quickstart"

I1112 14:27:51.553539 1428 apply_list.go:67] Installing "sample-templates/dancer quickstart"

I1112 14:27:51.553653 1428 apply_list.go:67] Installing "sample-templates/django quickstart"

I1112 14:27:51.553900 1428 apply_list.go:67] Installing "sample-templates/mysql"

I1112 14:27:51.554028 1428 apply_list.go:67] Installing "sample-templates/jenkins pipeline ephemeral"

I1112 14:27:51.554359 1428 apply_list.go:67] Installing "sample-templates/nodejs quickstart"

I1112 14:27:51.554567 1428 apply_list.go:67] Installing "sample-templates/mongodb"

I1112 14:27:51.554692 1428 apply_list.go:67] Installing "sample-templates/sample pipeline"

I1112 14:28:06.634946 1428 interface.go:41] Finished installing "sample-templates/postgresql" "sample-templates/cakephp quickstart" "sample-templates/dancer quickstart" "sample-templates/django quickstart" "sample-templates/rails quickstart" "sample-templates/jenkins pipeline ephemeral" "sample-templates/sample pipeline" "sample-templates/mongodb" "sample-templates/mysql" "sample-templates/nodejs quickstart" "sample-templates/mariadb"

I1112 14:28:28.673589 1428 interface.go:41] Finished installing "sample-templates" "centos-imagestreams" "openshift-router" "openshift-web-console-operator" "openshift-image-registry" "persistent-volumes"

Login to server ...

Creating initial project "myproject" ...

Server Information ...

OpenShift server started.

The server is accessible via web console at:

https://172.31.36.215:8443

You are logged in as:

User: developer

Password: <any value>

To login as administrator:

oc login -u system:admin

7. Log in to the UI

https://172.31.36.215:8443/console

system/admin

8. Administrator access

# Log in

sudo ./oc login -u system:admin --config=/home/centos/openshift3/openshift.local.clusterup/openshift-apiserver/admin.kubeconfig

# Check the current state

sudo ./oc get all --config=/home/centos/openshift3/openshift.local.clusterup/openshift-apiserver/admin.kubeconfig

NAME READY STATUS RESTARTS AGE

pod/docker-registry-1-rvv44 1/1 Running 0 29m

pod/persistent-volume-setup-88c5t 0/1 Completed 0 30m

pod/router-1-x527s 1/1 Running 0 29m

NAME DESIRED CURRENT READY AGE

replicationcontroller/docker-registry-1 1 1 1 29m

replicationcontroller/router-1 1 1 1 29m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/docker-registry ClusterIP 172.30.1.1 <none> 5000/TCP 30m

service/kubernetes ClusterIP 172.30.0.1 <none> 443/TCP 31m

service/router ClusterIP 172.30.190.49 <none> 80/TCP,443/TCP,1936/TCP 29m

NAME DESIRED SUCCESSFUL AGE

job.batch/persistent-volume-setup 1 1 30m

NAME REVISION DESIRED CURRENT TRIGGERED BY

deploymentconfig.apps.openshift.io/docker-registry 1 1 1 config

deploymentconfig.apps.openshift.io/router 1 1 1 config

# List the projects

sudo ./oc get projects --config=/home/centos/openshift3/openshift.local.clusterup/openshift-apiserver/admin.kubeconfig

NAME DISPLAY NAME STATUS

default Active

kube-dns Active

kube-proxy Active

kube-public Active

kube-system Active

myproject My Project Active

openshift Active

openshift-apiserver Active

openshift-controller-manager Active

openshift-core-operators Active

openshift-infra Active

openshift-node Active

openshift-service-cert-signer Active

openshift-web-console Active

9. List the containers

sudo docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c347c56d2a7c docker.io/openshift/origin-hypershift@sha256:81ca9b40f0c5ad25420792f128f8ae5693416171b26ecd9af2e581211c0bd070 "hypershift opensh..." 14 seconds ago Up 13 seconds k8s_c_openshift-controller-manager-v25zz_openshift-controller-manager_8fd42f89-05fc-11ea-84e4-062e09fba9f6_1

7a079835fd87 docker.io/openshift/origin-hyperkube@sha256:ab28b06f9e98d952245f0369c8931fa3d9a9318df73b6179ec87c0894936ecef "hyperkube kube-sc..." 16 seconds ago Up 15 seconds k8s_scheduler_kube-scheduler-localhost_kube-system_498d5acc6baf3a83ee1103f42f924cbe_1

33edea80b969 docker.io/openshift/origin-hyperkube@sha256:ab28b06f9e98d952245f0369c8931fa3d9a9318df73b6179ec87c0894936ecef "hyperkube kube-co..." 18 seconds ago Up 17 seconds k8s_controllers_kube-controller-manager-localhost_kube-system_2a0b2be7d0b54a4f34226da11ad7dd6b_1

c5c4b4a30927 docker.io/openshift/origin-service-serving-cert-signer@sha256:699e649874fb8549f2e560a83c4805296bdf2cef03a5b41fa82b3820823393de "service-serving-c..." 20 seconds ago Up 19 seconds k8s_operator_openshift-service-cert-signer-operator-6d477f986b-jdhpp_openshift-core-operators_67c4fe2f-05fc-11ea-84e4-062e09fba9f6_1

9bf5456b9a97 docker.io/openshift/origin-hypershift@sha256:81ca9b40f0c5ad25420792f128f8ae5693416171b26ecd9af2e581211c0bd070 "hypershift experi..." 22 seconds ago Up 21 seconds k8s_operator_openshift-web-console-operator-664b974ff5-fr7x2_openshift-core-operators_97dcc42f-05fc-11ea-84e4-062e09fba9f6_1

66f27274adb4 openshift/nodejs-010-centos7@sha256:bd971b467b08b8dbbbfee26bad80dcaa0110b184e0a8dd6c1b0460a6d6f5d332 "container-entrypo..." About a minute ago Exited (0) 43 seconds ago s2i_openshift_nodejs_010_centos7_sha256_bd971b467b08b8dbbbfee26bad80dcaa0110b184e0a8dd6c1b0460a6d6f5d332_eaab5bb0

e4c52a772a9f be30b6cce5fa "/usr/bin/origin-w..." About a minute ago Exited (137) 2 seconds ago k8s_webconsole_webconsole-5594d5b67f-8l4b8_openshift-web-console_b5515962-05fc-11ea-84e4-062e09fba9f6_0

a778ec40561e openshift/origin-pod:v3.11 "/usr/bin/pod" About a minute ago Exited (0) 2 seconds ago k8s_POD_webconsole-5594d5b67f-8l4b8_openshift-web-console_b5515962-05fc-11ea-84e4-062e09fba9f6_0

e15062eac455 docker.io/openshift/origin-docker-registry@sha256:5c2fe22619668face238d1ba8602a95b3102b81e667b54ba2888f1f0ee261ffd "/bin/sh -c '/usr/..." 6 minutes ago Up 6 minutes k8s_registry_docker-registry-1-wmp47_default_9cfdaf50-05fc-11ea-84e4-062e09fba9f6_0

861c4c49572a openshift/origin-pod:v3.11 "/usr/bin/pod" 7 minutes ago Up 7 minutes k8s_POD_docker-registry-1-wmp47_default_9cfdaf50-05fc-11ea-84e4-062e09fba9f6_0

c6ebd5ad0bba docker.io/openshift/origin-hypershift@sha256:81ca9b40f0c5ad25420792f128f8ae5693416171b26ecd9af2e581211c0bd070 "hypershift experi..." 7 minutes ago Exited (255) 24 seconds ago k8s_operator_openshift-web-console-operator-664b974ff5-fr7x2_openshift-core-operators_97dcc42f-05fc-11ea-84e4-062e09fba9f6_0

cddd662f7d86 openshift/origin-pod:v3.11 "/usr/bin/pod" 7 minutes ago Up 7 minutes k8s_POD_openshift-web-console-operator-664b974ff5-fr7x2_openshift-core-operators_97dcc42f-05fc-11ea-84e4-062e09fba9f6_0

bdca70a2b67f docker.io/openshift/origin-hypershift@sha256:81ca9b40f0c5ad25420792f128f8ae5693416171b26ecd9af2e581211c0bd070 "hypershift opensh..." 7 minutes ago Exited (255) 23 seconds ago k8s_c_openshift-controller-manager-v25zz_openshift-controller-manager_8fd42f89-05fc-11ea-84e4-062e09fba9f6_0

9d671211845b openshift/origin-pod:v3.11 "/usr/bin/pod" 7 minutes ago Up 7 minutes k8s_POD_openshift-controller-manager-v25zz_openshift-controller-manager_8fd42f89-05fc-11ea-84e4-062e09fba9f6_0

8561b5a28a35 docker.io/openshift/origin-control-plane@sha256:da776a9c4280b820d1b32246212f55667ff34a4370fe3da35e8730e442206be0 "openshift start n..." 8 minutes ago Up 8 minutes k8s_kube-proxy_kube-proxy-z9622_kube-proxy_67da606f-05fc-11ea-84e4-062e09fba9f6_0

a240a1ac6457 docker.io/openshift/origin-control-plane@sha256:da776a9c4280b820d1b32246212f55667ff34a4370fe3da35e8730e442206be0 "openshift start n..." 8 minutes ago Up 8 minutes k8s_kube-dns_kube-dns-5xlrh_kube-dns_67da7e68-05fc-11ea-84e4-062e09fba9f6_0

2233dff0c201 docker.io/openshift/origin-service-serving-cert-signer@sha256:699e649874fb8549f2e560a83c4805296bdf2cef03a5b41fa82b3820823393de "service-serving-c..." 8 minutes ago Exited (255) 24 seconds ago k8s_operator_openshift-service-cert-signer-operator-6d477f986b-jdhpp_openshift-core-operators_67c4fe2f-05fc-11ea-84e4-062e09fba9f6_0

b622c82b5ef3 openshift/origin-pod:v3.11 "/usr/bin/pod" 8 minutes ago Up 8 minutes k8s_POD_kube-proxy-z9622_kube-proxy_67da606f-05fc-11ea-84e4-062e09fba9f6_0

9303e90d164c openshift/origin-pod:v3.11 "/usr/bin/pod" 8 minutes ago Up 8 minutes k8s_POD_kube-dns-5xlrh_kube-dns_67da7e68-05fc-11ea-84e4-062e09fba9f6_0

02f9425b8c7b openshift/origin-pod:v3.11 "/usr/bin/pod" 8 minutes ago Up 8 minutes k8s_POD_openshift-service-cert-signer-operator-6d477f986b-jdhpp_openshift-core-operators_67c4fe2f-05fc-11ea-84e4-062e09fba9f6_0

f279a265ee20 docker.io/openshift/origin-control-plane@sha256:da776a9c4280b820d1b32246212f55667ff34a4370fe3da35e8730e442206be0 "/bin/bash -c '#!/..." 9 minutes ago Up 9 minutes k8s_etcd_master-etcd-localhost_kube-system_c1cc5d01ac323a05089a07a6082dbe54_0

7376f93cadce docker.io/openshift/origin-hyperkube@sha256:ab28b06f9e98d952245f0369c8931fa3d9a9318df73b6179ec87c0894936ecef "hyperkube kube-sc..." 9 minutes ago Exited (1) 24 seconds ago k8s_scheduler_kube-scheduler-localhost_kube-system_498d5acc6baf3a83ee1103f42f924cbe_0

0d250ebb56eb docker.io/openshift/origin-hyperkube@sha256:ab28b06f9e98d952245f0369c8931fa3d9a9318df73b6179ec87c0894936ecef "hyperkube kube-co..." 9 minutes ago Exited (255) 23 seconds ago k8s_controllers_kube-controller-manager-localhost_kube-system_2a0b2be7d0b54a4f34226da11ad7dd6b_0

78f161557ef8 openshift/origin-pod:v3.11 "/usr/bin/pod" 9 minutes ago Up 9 minutes k8s_POD_kube-scheduler-localhost_kube-system_498d5acc6baf3a83ee1103f42f924cbe_0

adc1aa2a86d8 openshift/origin-pod:v3.11 "/usr/bin/pod" 9 minutes ago Up 9 minutes k8s_POD_kube-controller-manager-localhost_kube-system_2a0b2be7d0b54a4f34226da11ad7dd6b_0

62e223931bbc openshift/origin-pod:v3.11 "/usr/bin/pod" 9 minutes ago Up 9 minutes k8s_POD_master-etcd-localhost_kube-system_c1cc5d01ac323a05089a07a6082dbe54_0

9b30e2734938 openshift/origin-node:v3.11 "hyperkube kubelet..." 9 minutes ago Up 9 minutes origin

10. Clean up

# Stop the cluster

sudo ./oc cluster down

# Remove the configuration

sudo rm -rf openshift.local.clusterup

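1. Install Docker

sudo apt-get update

sudo apt-get install docker.io

2. Run Rancher

mkdir /home/ubuntu/rancker

sudo docker run -d -v /home/ubuntu/rancker:/var/lib/rancher/ --restart=unless-stopped -p 80:80 -p 443:443 rancher/rancher:stable

3. Log in

http://OUTER_IP

The default username is admin; you have to set a password on first login.

The server URL is usually set to the internal address, https://172.31.33.84

4. Create a new cluster by following the wizard, which generates the corresponding commands for you

4.1. As instructed, run controlplane and worker on node1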

sudo docker run -d --privileged --restart=unless-stopped --net=host -v /etc/kubernetes:/etc/kubernetes -v /var/run:/var/run rancher/rancher-agent:v2.3.2 --server https://172.31.33.84 --token 4rjrgss2hq5w6nlmp4frptxqlq68zr7szvd9fd45pm7rfk968snsjk --ca-checksum 79f195454ab982ce478878f4e5525516ad09d6eadc4c611d4d542da9a7fc6c7e --controlplane --worker

4.2. As instructed, run etcd and worker on node2

sudo docker run -d --privileged --restart=unless-stopped --net=host -v /etc/kubernetes:/etc/kubernetes -v /var/run:/var/run rancher/rancher-agent:v2.3.2 --server https://172.31.33.84 --token 4rjrgss2hq5w6nlmp4frptxqlq68zr7szvd9fd45pm7rfk968snsjk --ca-checksum 79f195454ab982ce478878f4e5525516ad09d6eadc4c611d4d542da9a7fc6c7e --etcd --worker

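5. The Rancher page will show that both nodes have joined successfully

6. Configure the firewall so that Rancher traffic can get through

I opened ports 2379 and 10250 (the ports needed by K8S had already been opened earlier)

7. Choose an app to deploy

This is much more convenient than deploying K8S directly!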

1. Disable swap

sudo swapoff -a

2. Enable docker.service at boot

sudo systemctl enable docker.service

3. Switch the cgroup driver to systemd

# See https://kubernetes.io/docs/setup/cri/

sudo vi /etc/docker/daemon.json

# Contents:

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2"

}
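The same settings can be written from a script instead of an editor. This is a minimal sketch: `write_daemon_json` is a hypothetical helper, and the target path is a parameter so the file can be generated somewhere harmless and inspected before it replaces `/etc/docker/daemon.json`.

```shell
#!/usr/bin/env bash
# write_daemon_json [TARGET]: write the Docker daemon settings shown above.
# TARGET defaults to /etc/docker/daemon.json (writing there needs root).
write_daemon_json() {
  local target="${1:-/etc/docker/daemon.json}"
  cat > "$target" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF
}

# After installing the file, restart Docker and confirm the driver, for example:
#   sudo systemctl restart docker
#   sudo docker info --format '{{.CgroupDriver}}'
```

The kubelet and Docker have to agree on the cgroup driver, which is why the check after the restart is worth doing before running `kubeadm init`.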

4. Some useful commands

kubeadm init

kubeadm reset

kubectl api-versions

kubectl config view

kubectl cluster-info

kubectl cluster-info dump

kubectl get nodes

kubectl get nodes -o wide

kubectl describe node mynode

kubectl get rc,namespace

kubectl get pods

kubectl get pods --all-namespaces -o wide

kubectl describe pod mypod

kubectl get deployments

kubectl get deployment kubernetes-dashboard -n kubernetes-dashboard

kubectl describe deployment kubernetes-dashboard --namespace=kubernetes-dashboard

kubectl expose deployment hikub01 --type=LoadBalancer

kubectl get services

kubectl get service -n kube-system

kubectl describe services kubernetes-dashboard --namespace=kubernetes-dashboard

kubectl proxy

kubectl proxy --address='172.172.172.101' --accept-hosts='.*' --accept-paths='.*'

kubectl run hikub01 --image=myserver:1.0.0 --port=8080

kubectl create -f myserver-deployment.yaml

kubectl apply -f https://docs.projectcalico.org/v3.10/manifests/calico.yaml

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta4/aio/deploy/recommended.yaml

kubectl delete deployment mydeployment

kubectl delete node mynode

kubectl delete pod mypod

kubectl get events --namespace=kube-system

kubectl taint node mynode node-role.kubernetes.io/master-

kubectl taint nodes --all node-role.kubernetes.io/master-

kubectl edit service myservice

kubectl edit service kubernetes-dashboard -n kube-system

kubectl get secret -n kube-system | grep neohope | awk '{print $1}'

This section deploys the application from a YAML file.

1. Write the file

vi hikub01-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: hikub01-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - name: myserver
        image: myserver:1.0.0
        ports:
        - containerPort: 8080

2. Create the deployment

kubectl create -f hikub01-deployment.yaml

3. Expose the port

kubectl expose deployment hikub01-deployment --type=LoadBalancer

4. Test

# Check the pods

kubectl get pods -o wide

# Check the deployments

kubectl get deployments -o wide

# Check the services

kubectl get services -o wide

# Based on the output, access the service from a browser or with wget/curl

curl http://ip:port
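Right after `kubectl create`, the pod may not be ready yet, so the first `curl` can fail. A small retry helper avoids testing too early. This is a sketch, not part of the original steps; `retry` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# retry ATTEMPTS DELAY CMD...: re-run CMD until it succeeds or ATTEMPTS is used up.
# Sleeps DELAY seconds between attempts; returns 0 on success, 1 otherwise.
retry() {
  local attempts="$1" delay="$2" i
  shift 2
  for ((i = 1; i <= attempts; i++)); do
    if "$@"; then
      return 0
    fi
    if ((i < attempts)); then
      sleep "$delay"
    fi
  done
  return 1
}
```

For example, `retry 30 2 curl -fsS http://ip:port` keeps polling the service for up to about a minute, with `ip:port` taken from the `kubectl get services -o wide` output as above.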

5. Clean up

kubectl delete -f hikub01-deployment.yaml

In this section, we try deploying some services.

1. First, prepare our own Docker image

1.1. Prepare the files

vi Dockerfile

FROM node:6.12.0

EXPOSE 8080

COPY myserver.js .

CMD node myserver.js

vi myserver.js

var http = require('http');

var handleRequest = function(request, response) {

console.log('Received request for URL: ' + request.url);

response.writeHead(200);

response.end('Hello World!');

};

var www = http.createServer(handleRequest);

www.listen(8080);

1.2. Test myserver.js

nodejs myserver.js

1.3. Build the image

# Build the image

sudo docker build -t myserver:1.0.0 .

1.4. Test the container

sudo docker run -itd --name=myserver -p8080:8080 myserver:1.0.0

curl localhost:8080

2. Export the image

docker images

sudo docker save 0fb19de44f41 -o myserver.tar

3. Import it on the other two nodes

scp myserver.tar ubuntu@node01:/home/ubuntu

ssh node01

sudo docker load -i myserver.tar

sudo docker tag 0fb19de44f41 myserver:1.0.0

4. Deploy the service with kubectl

# Create a deployment

kubectl run hikub01 --image=myserver:1.0.0 --port=8080

# Expose the service

kubectl expose deployment hikub01 --type=LoadBalancer

# Check the pods

kubectl get pods -o wide

# Check the deployments

kubectl get deployments -o wide

# Check the services

kubectl get services -o wide

# Based on the output, access the service from a browser or with wget/curl

curl http://ip:port

5. Clean up

# Delete the service

kubectl delete service hikub01

# Delete the deployment

kubectl delete deployment hikub01

# Delete the pod

kubectl delete pod hikub01